Assessing the quality and uncertainty of in-situ seismic investigation methods

ABSTRACT

Design in New Zealand is increasingly requiring parameters obtained from in-situ seismic testing for use in modelling and assessment of the built environment. In New Zealand shear wave velocity (VS) profiles are most commonly required. However, the quality of in-situ seismic testing can vary substantially, and it is uncommon that realistic estimates of uncertainty are provided. As such, there is a need for guidance on the advantages and limitations of the commercially available in-situ investigation methods, how to procure investigations, and how to assess the quality of the investigation results. This paper presents some of the key factors that should be accounted for in the acquisition, processing and interpretation of in-situ seismic investigation methods. Approaches that can be taken to demonstrate the uncertainty associated with various seismic investigation methods are discussed, including discussion related to the potential inaccuracies inherent in some commonly used approaches. Lastly, recommendations for the level of information that should be provided as part of the reporting for these methods are presented.

Introduction

Historically, the use of in-situ seismic testing appears to have played a relatively minor role in geotechnical site characterisation in New Zealand outside of large facility projects such as dams and pipelines. Following the 2010-2011 Canterbury earthquake sequence, increased awareness of the importance of site response and liquefaction hazard has resulted in an increased demand for in-situ seismic testing throughout New Zealand to characterise both shear wave velocity (VS), and to a lesser extent compression wave velocity (VP).

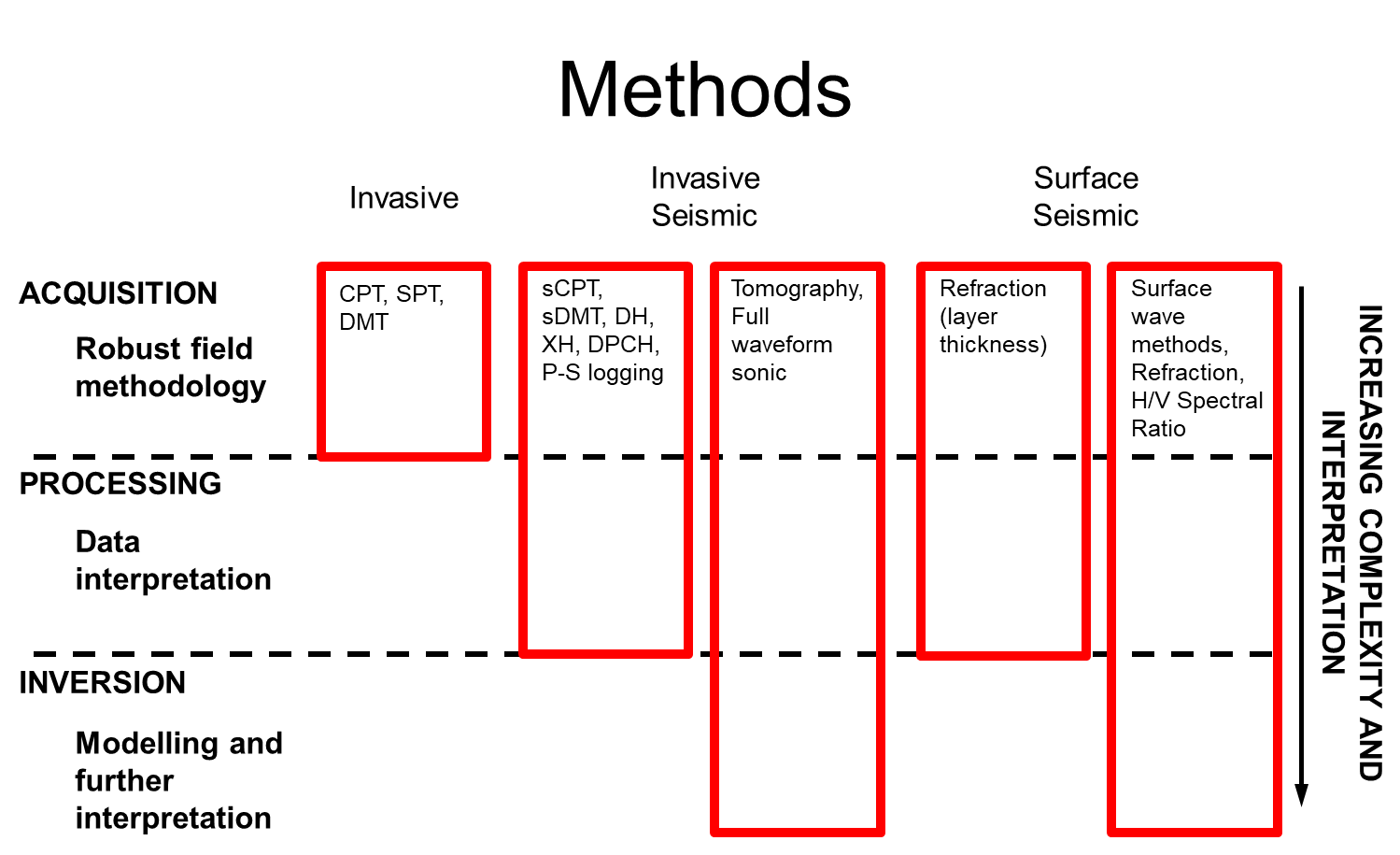

A summary of a range of traditional and seismic site investigation methods is provided in Figure 1. Traditional invasive site investigation methods include the cone penetrometer test (CPT), the standard penetration test (SPT), and the dilatometer test (DMT). These involve either the advancement of a probe into the ground by mechanical means using a string of rods, or testing in intervals down a borehole. Invasive seismic methods also require the advancement of a probe or boreholes to locate sensors for testing below the ground surface. Surface seismic methods, or non-invasive methods, do not advance any sensors below the ground surface, with all equipment located at ground level.

Figure 1: Summary of various seismic investigation methods and the steps required to produce seismic velocity data for each. Abbreviations are discussed in the body of the text.

Figure 1 summarises the key steps that are required for each method in order to output the parameters required to inform engineering design. All methods require the acquisition of data through a robust field methodology, with seismic methods requiring the processing of data into a format that enables interpretation and the development of design parameters. Some methods require the additional step of inversion, involving the use of modelling and further interpretation. The increased use of in-situ seismic testing has highlighted a general lack of understanding, amongst both contractors and engineers, of some of the key aspects of these methods across all of these steps. The main issues are:

- Incorrect field acquisition methodology

- Incorrect processing and inversion – a key issue for surface seismic methods

- Lack of understanding of the limitations of different methods

- Lack of any acknowledgement of the uncertainty of the methods and its quantification

This paper presents some of the key factors that should be accounted for in the acquisition, processing and inversion steps of in-situ seismic investigation methods. Approaches that can be taken to demonstrate the uncertainty associated with various seismic investigation methods are discussed. Recommendations for the level of information that should be provided as part of the reporting for these methods are proposed. Here we focus on the invasive and surface seismic methods that have most widespread use in NZ to define VS profiles. For invasive methods, the focus is downhole methods, while for surface seismic methods, the focus is active source methods.

Invasive methods

Invasive methods are based on the propagation of body waves from a known source location to a receiver (or receivers). Sources of various forms are used to create compression waves (P-waves) or shear waves (S-waves), which can be recorded by receivers that measure either acceleration (accelerometers) or velocity (geophones). Downhole methods use a source located at the ground surface and receivers that are advanced to a range of different measurement depths using a single borehole/probe. Methods typically used in New Zealand are the borehole-based downhole (DH), the seismic CPT (sCPT) and the seismic DMT (sDMT). Crosshole methods use a source and receiver in separate boreholes/probes located at a common measurement depth, which are then advanced to a range of different depths. Crosshole methods typically used in New Zealand are the borehole-based crosshole (XH) and the direct push crosshole (DPCH). A summary of these methods is provided here, and more details are provided in Wentz (2019).

Downhole Methods

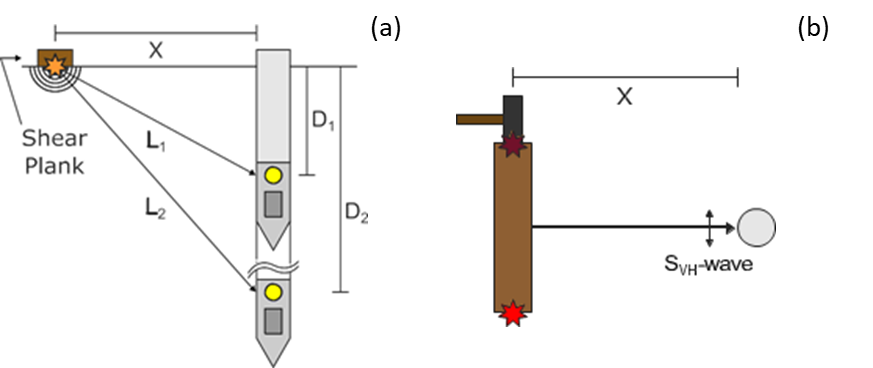

A schematic of the field setup for the downhole methods (DH) is presented in Figure 2, and the general procedures for these methods are outlined in the relevant ASTM test standards (ASTM 2017). DH methods use a source offset (X) a short distance from the location of the borehole/probe at the ground surface (1-3 m is recommended), and receiver(s) at depth. For VS testing, impacts on the ends of a shear plank oriented perpendicular to the offset dimension from the borehole/probe should be used. This creates downward propagating, horizontally polarised shear waves (SVH wave), with Figure 2 showing one possible ray path for each receiver location, which represents the direct wave from source to receiver. The impact on the shear plank triggers the start of the data acquisition system (DAQ), and this is recorded by receiver(s) orientated horizontally to record the horizontal particle motion. To develop a VS profile this setup is repeated with the receiver(s) located at a range of different depths (D1 and D2).

Figure 2: Schematic of the setup for downhole test, with a single receiver shown for two different test depths (a) side view; (b) plan view

For all methods, good coupling is required between the ground and the borehole/probe. As probes are pushed into the ground directly, coupling is usually good. The success of borehole methods relies on good grouting between the borehole casing and surrounding soil, otherwise wave propagation will be poor. This is a key aspect of the field step and care should be taken to ensure a good grouting methodology.

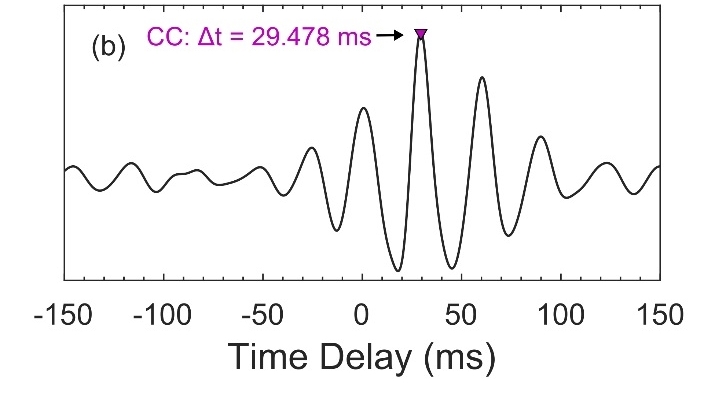

At each test depth, the orientation of the receiver(s) should be known, and the initial polarity of the shear wave that is being generated should be known. The initial polarity is the initial voltage departure, either positive or negative, recorded by the DAQ for a given impact direction on the shear plank. By hitting on each end of the plank, reversed polarity waves can be generated at each test depth, and plotting these together should create traces where the shear wave arrival diverges as a mirror of each other (‘butterflied’ traces as shown in Figure 3(a)). Multiple impacts should also be used at each test depth and the recorded waveforms stacked (superimposed) in order to develop a consistent clear waveform. These approaches all greatly simplify the data interpretation as discussed in the next section.
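The effect of stacking and of reversed-polarity impacts can be illustrated with a minimal sketch. The waveform, noise level, number of impacts and all function names below are hypothetical and chosen only for illustration; real records would come from the DAQ.

```python
import math
import random

def stack(records):
    """Average repeated impact records sample by sample."""
    n = len(records)
    return [sum(samples) / n for samples in zip(*records)]

def rms(values):
    """Root-mean-square amplitude of a record."""
    return math.sqrt(sum(v * v for v in values) / len(values))

# Hypothetical clean shear-wave arrival: a decaying 50 Hz sine starting at 25 ms.
dt = 0.0002  # sample interval (s), assumed
signal = [
    math.sin(2 * math.pi * 50 * (i * dt - 0.025)) * math.exp(-60 * (i * dt - 0.025))
    if i * dt >= 0.025 else 0.0
    for i in range(500)
]

rng = random.Random(0)  # fixed seed so the sketch is repeatable

def impact(polarity):
    """One noisy record; polarity is +1 or -1 for the two ends of the shear plank."""
    return [polarity * s + rng.gauss(0.0, 0.3) for s in signal]

single = impact(+1)
stacked_pos = stack([impact(+1) for _ in range(16)])
stacked_neg = stack([impact(-1) for _ in range(16)])

# Residual noise before and after stacking.
noise_single = rms([a - s for a, s in zip(single, signal)])
noise_stacked = rms([a - s for a, s in zip(stacked_pos, signal)])

# Butterfly check: mirrored traces should nearly cancel when summed,
# while their difference retains the shear-wave energy.
mirror_residual = rms([p + q for p, q in zip(stacked_pos, stacked_neg)])
shear_energy = rms([p - q for p, q in zip(stacked_pos, stacked_neg)])

print(f"noise, single impact: {noise_single:.3f}")
print(f"noise, 16-impact stack: {noise_stacked:.3f}")
print(f"sum of butterflied traces: {mirror_residual:.3f}")
print(f"difference of butterflied traces: {shear_energy:.3f}")
```

For uncorrelated noise, averaging N impacts reduces the noise amplitude by roughly the square root of N, which is why a modest number of repeated impacts is usually enough to produce a clear waveform.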

For DH methods it is typical to assume a straight line ray path from the source to the receiver(s), as shown in Figure 2. This straight line assumption is not always correct, and refraction as waves move from one soil layer to the next will bend this path, particularly in the top 3-5 m of the soil profile. As a result the interpreted velocities in this range should be treated with caution (Stolte & Cox 2020).

In a New Zealand context, the majority of sCPT use a single receiver setup, with a single receiver package located above the cone tip. This setup is used to record wave arrivals at the receiver location at each test depth using a separate source impact, and the difference in wave arrivals from one depth to the next is used to calculate a VS for that test depth interval. This is referred to as the pseudo-interval method, and in Figure 2 D1 and D2 would be two separate test setups. As triggering of the DAQ can be inconsistent, particularly in lower cost systems, errors can be introduced into this method, as the time zero for each separate test depth record can shift, meaning the wave arrival times are not a true representation of the propagation. A good practice is to record the trigger as part of the data acquisition, so this effect can be removed. However, this is not routinely done in practice.

All sDMT setups and a small number of sCPT use a dual receiver setup, with two receiver packages located a fixed vertical distance (0.5 to 1.0 m) from each other above the cone tip. This setup records wave arrivals at two receiver locations (both D1 and D2 in Figure 2) for each test depth simultaneously using a single source impact, referred to as the true-interval method. Because the dual receiver system records the wave arrivals at two locations for the same source impact, the triggering of the DAQ, and therefore the zero time, is the same for both records. This removes the issues related to an inconsistent trigger.

Data Processing and Uncertainty

Processing of the DH method starts with the picking of the travel times of the seismic waves, which could be the total travel time from source to receiver or the relative travel time between two receiver locations (the interval travel time). The waveform of interest is the first major departure with the correct polarity, typically associated with an increase in amplitude and change in frequency content. This waveform can be picked using multiple approaches, which are summarised in Figure 3. The first arrival (FA) pick is the initial arrival of the shear wave, which can sometimes be difficult to identify. The first peak/trough (PT) is the first peak after the FA for one source direction and the first trough for the other source direction. The first crossover (CO) is the first point after the FA where both waveforms cross each other. When the interval travel time is required, differences in the picked arrivals at subsequent depths for a particular method are used. A final approach makes use of the peak response of the cross-correlation (CC) function between pairs of waveforms from subsequent measurement depths to define the interval travel time. Reporting should clearly indicate what approach has been used, and the picks should be shown to enable a visual inspection of their quality.
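As an illustration of the CC approach, the sketch below estimates an interval travel time from the peak of the cross-correlation between two same-polarity waveforms. The waveforms are synthetic wavelets with an assumed 5.5 ms delay between depths, and the sample interval, function names and parameters are all hypothetical; this is not a substitute for processing real records.

```python
import math

def ricker(n, dt, t0, f0):
    """Synthetic wavelet centred at t0 (s) with dominant frequency f0 (Hz)."""
    out = []
    for i in range(n):
        a = (math.pi * f0 * (i * dt - t0)) ** 2
        out.append((1.0 - 2.0 * a) * math.exp(-a))
    return out

def cc_interval_time(upper, lower, dt, max_lag):
    """Interval travel time (s) from the peak of the cross-correlation function.

    Only non-negative lags are searched, assuming the deeper record arrives later.
    """
    best_lag, best_value = 0, float("-inf")
    for lag in range(max_lag + 1):
        # Pair upper[i] with lower[i + lag]; zip truncates to the overlap.
        value = sum(u * l for u, l in zip(upper, lower[lag:]))
        if value > best_value:
            best_lag, best_value = lag, value
    return best_lag * dt

dt = 0.0001  # sample interval (s), assumed
upper = ricker(1000, dt, t0=0.0250, f0=50.0)  # shallower receiver depth
lower = ricker(1000, dt, t0=0.0305, f0=50.0)  # deeper receiver depth
interval_time = cc_interval_time(upper, lower, dt, max_lag=200)
print(f"interval travel time = {interval_time * 1000:.1f} ms")
```

The CC estimate uses the whole waveform rather than a single picked point, so it is sensitive to changes in waveform shape between depths; this is one reason the picks and the CC result are worth comparing.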

Once travel times have been picked, there are multiple methods to process DH data to develop VS profiles with depth. The first is the interval velocity method (ASTM 2017), which uses the interval travel time between two receiver depths, and the difference in the travel path length from source to each receiver, defining the VS of the deposits between the receiver depths. As mentioned previously, triggering issues can introduce errors when using pseudo-interval data. This manifests as fluctuations in the VS profile that are typically not representative of reality.
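The interval velocity calculation can be sketched as follows, assuming straight ray paths from a surface source offset X to each receiver depth. The depths, arrival times and offset are hypothetical values chosen for illustration, as are the function names.

```python
import math

def path_length(depth_m, offset_m):
    """Straight-line source-to-receiver distance for a surface source offset."""
    return math.hypot(depth_m, offset_m)

def interval_velocity(d1, t1, d2, t2, offset_m):
    """VS between two receiver depths from path-length and arrival-time differences."""
    dt = t2 - t1
    if dt <= 0:
        raise ValueError("arrival time must increase with depth")
    return (path_length(d2, offset_m) - path_length(d1, offset_m)) / dt

# Hypothetical picks at 4 m and 5 m depth with a 2 m source offset.
vs = interval_velocity(d1=4.0, t1=0.0250, d2=5.0, t2=0.0305, offset_m=2.0)

# Same picks, but with the deeper record's time zero shifted by 0.5 ms,
# mimicking an inconsistent trigger in a pseudo-interval test.
vs_shifted = interval_velocity(d1=4.0, t1=0.0250, d2=5.0, t2=0.0310, offset_m=2.0)

print(f"VS = {vs:.0f} m/s, with 0.5 ms trigger shift = {vs_shifted:.0f} m/s")
```

In this example a 0.5 ms shift in time zero changes the interval VS by about 8%, which illustrates why small triggering inconsistencies matter when the interval travel time is only a few milliseconds.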

The second is the slope-based method (Patel 1981, Kim et al. 2004, Redpath 2007, Boore and Thompson 2007) that converts the total travel time to an equivalent vertical travel time and plots this against depth. Linear trends are then fit to groups of points to define velocities for different layers within the profile, constrained using clear changes in the slope or soil layer boundaries identified by CPT data. As a result, this approach is able to average out some of the effects of triggering issues.
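A minimal sketch of the slope-based idea is given below for a single layer, assuming straight ray paths and an ordinary least-squares fit of vertical travel time against depth. The data are synthetic: a uniform 200 m/s layer with an alternating 0.3 ms trigger error added to each record. All names and values are hypothetical.

```python
import math

def vertical_time(total_time_s, depth_m, offset_m):
    """Convert a slant travel time to an equivalent vertical travel time."""
    return total_time_s * depth_m / math.hypot(depth_m, offset_m)

def slope_velocity(depths_m, vertical_times_s):
    """Layer velocity as the inverse of the fitted time-versus-depth slope."""
    n = len(depths_m)
    d_mean = sum(depths_m) / n
    t_mean = sum(vertical_times_s) / n
    sxy = sum((d - d_mean) * (t - t_mean) for d, t in zip(depths_m, vertical_times_s))
    sxx = sum((d - d_mean) ** 2 for d in depths_m)
    return sxx / sxy  # 1 / slowness

offset = 2.0                               # source offset X (m), assumed
depths = [2.0, 3.0, 4.0, 5.0, 6.0]         # receiver depths (m)
true_vs = 200.0                            # synthetic layer velocity (m/s)
jitter = [0.0003, -0.0003, 0.0003, -0.0003, 0.0003]  # trigger error (s)

totals = [math.hypot(d, offset) / true_vs + j for d, j in zip(depths, jitter)]
t_vert = [vertical_time(t, d, offset) for t, d in zip(totals, depths)]
vs_layer = slope_velocity(depths, t_vert)

# Pseudo-interval velocities from the same jittered records, for comparison.
interval_vs = [
    (math.hypot(d2, offset) - math.hypot(d1, offset)) / (t2 - t1)
    for d1, d2, t1, t2 in zip(depths, depths[1:], totals, totals[1:])
]

print(f"slope-based VS = {vs_layer:.1f} m/s")
print("interval VS =", [round(v) for v in interval_vs])
```

With these synthetic inputs the fitted layer velocity stays within about 1% of the true value, while the interval velocities from the same records scatter between roughly 175 and 235 m/s. The choice of which points to group into a layer remains a matter of judgement and is not addressed by this sketch.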

Clearly there is a range of approaches that can be taken to process a DH dataset, and this allows for an assessment of the uncertainty in the VS profile, representative of the epistemic uncertainty. To demonstrate this, a range of VS profiles developed from a single dataset that has been processed using multiple travel time picking and processing methods is presented in Figure 4. The true-interval (TI) and pseudo-interval (PI) methods have the largest differences across the different picking methods, with the slope method (SM) being much more consistent across picking methods. The PT and CO approaches have quite consistent VS estimates, while the FA and CC methods show some outliers. It is therefore important for contractors and engineers to acknowledge that this uncertainty is present, and to take steps to demonstrate or quantify this.

Figure 3: Examples of interval travel times calculated using (a) different picks from two depths using butterflied waveforms; (b) cross-correlation function between the positive polarity waveforms from (a) (after Stolte & Cox 2020)

Figure 4: Comparison of VS profiles developed using a range of travel time picking and processing methods (a) pseudo-interval; (b) true-interval; (c) slope method (after Stolte & Cox 2020)

Active source surface wave methods

Active source surface wave methods are based on the propagation of surface waves, so called because they travel along the surface of a medium, here the ground surface. In New Zealand this is most commonly referred to as MASW, multi-channel analysis of surface waves (Park et al. 1999), and the most common application is based on the propagation of Rayleigh waves. Active source methods use an excitation source at a known location and receivers at known locations to record the propagation of the Rayleigh waves and use this to determine the VS profile characteristics. Here we will focus on Rayleigh wave-based methods as they are the most common. Surface wave methods require an experienced analyst in order to provide robust outputs, and not all aspects of these methods can be fully discussed in this paper. These methods are described in more detail by Foti et al. (2017).

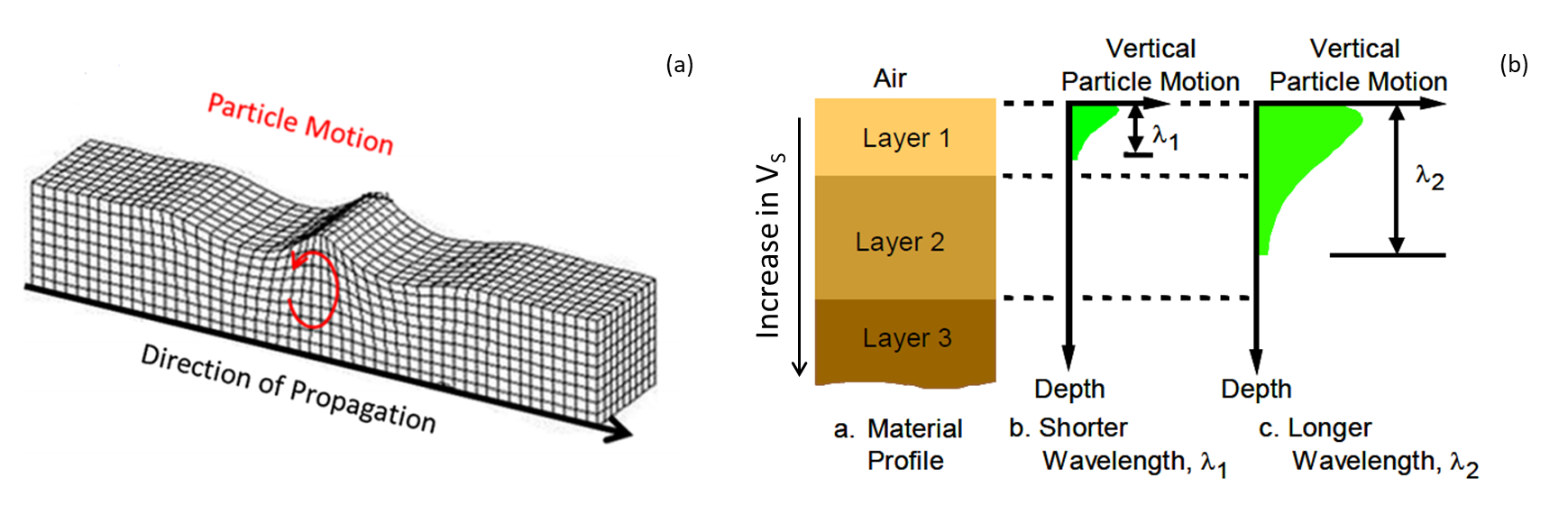

Rayleigh waves have an elliptical particle motion, meaning particle movement both in line with and perpendicular to the wave propagation direction, as shown in Figure 5(a). There are some key features of Rayleigh waves that make them useful for surface wave testing. First, two thirds of the energy generated by a vertical source on the ground surface is converted to surface waves. Second, as Rayleigh waves travel along the surface of a material, their attenuation is not as rapid as that of body waves. Third, Rayleigh wave velocity (VR) is similar to VS, with VS ≈ 1.1VR. Lastly, and perhaps most important for surface wave testing, Rayleigh waves are dispersive in layered deposits.

The dispersive nature of Rayleigh waves means that different wavelengths (that can be related to depth) will travel at different velocities. Figure 5(b) shows a schematic soil profile with different Rayleigh wave wavelengths. The shortest wavelength has all particle motion within Layer 1, such that the VR is controlled by the properties of Layer 1 only (VS, VP and density). The longer wavelength has some particle motion within Layer 1 and some within Layer 2, meaning the VR will be influenced by the properties of both layers. In a soil profile where VS is increasing from one layer to the next with depth, this means that longer and longer wavelengths will have higher and higher VR. Therefore, if a source can create energy over a range of frequencies (remembering that velocity = frequency x wavelength), it will create Rayleigh waves over a range of wavelengths and be able to characterise over a range of depths through the soil profile.

The next sections will provide an overview of the different steps required to progress from field acquisition through to the development of VS profiles.

Figure 5: (a) Characteristics of Rayleigh waves (Foti et al. 2017); (b) schematic of the propagation of Rayleigh waves of different wavelengths

Acquisition

Active source data is collected using a linear array of sensors to record the wave propagation from the source through the array. These arrays typically consist of 24 4.5 Hz vertical geophones that measure velocity variations with time, often spaced 2 m apart (46 m total array length). Good connection with the ground below is required, and this is achieved either through the use of small tripod feet on hard surfaces, or short spikes that can be pushed into grass or other soft surfaces. Active source data is most commonly acquired using a sledgehammer source impacting onto a strike plate on the ground surface off the end of the geophone array. A trigger is attached to the source so that the time of the initiation of the source can be recorded and fed into the processing. A good practice is the use of multiple different source offsets off each end of the array. This allows for identification of any lateral variability beneath the array, and helps to account for near source effects, which could lead to an inaccurate representation of VS. At each offset multiple impacts should be used and stacked in order to improve the quality of the signal (improving the signal to noise ratio). The outcome of the data acquisition is velocity time series data for each geophone for the range of source offsets used (Figure 6(a)). The array length used for active source testing should be approximately twice the depth of interest, with a sledgehammer and 46 m array able to characterise to approximately 20 m depth. To characterise deeper, different sources, larger source offsets, or larger geophone spacings could be used.

Figure 6: Examples of: (a) raw time series data from acquisition using 46 geophones (Foti et al. 2017); (b) experimental dispersion curve using frequency; (c) experimental dispersion curve using wavelength

Processing

The aim of processing is to extract the experimental dispersion data that is representative of the soil/rock beneath the array using the velocity time series data from each geophone location. The experimental dispersion curve relates the Rayleigh wave velocity, often referred to as phase velocity, with wavelength/frequency (all three are linked as discussed earlier in this section). There is a range of wavefield transformation methods available to convert the time series data from each geophone, representing time and space, to frequency and wavenumber. This transformation creates a surface plot relating phase velocity, frequency and energy, and the peaks in the surface at each frequency/wavelength are extracted to define the experimental dispersion curve. The range of different transformation methods will often result in comparable dispersion curve estimates, and examples are shown in Figure 6(b). The quality of the data collected will be reflected in the quality of the dispersion curve extracted. This data should be provided and the picks of the peaks representing the dispersion curves presented, as the quality of this data can be visually assessed by someone with a limited knowledge of the methods.

It is important to try to quantify the uncertainty in this experimental data, as this will have a significant influence on the inversion process. The data from multiple offsets enable the characterisation of this uncertainty. This is shown in Figure 6(b), with the mean and ±1 standard deviation of the experimental dispersion data. The extracted experimental dispersion curve data can then be used to provide an initial estimate of the bounds of velocities and depths that can be extracted in the inversion process. The minimum wavelength in the dispersion curve data is equal to approximately twice the minimum depth that can be resolved. One half to one third of the maximum wavelength can provide an estimate of the maximum depth that can be resolved. As VS ≈ 1.1VR, the VS of the surface layer can be estimated from the VR at the shortest wavelengths. This provides some constraint on what can be realistically extracted during the inversion process. There are a number of other factors that should be assessed in the processing stage but these are outside the scope of this paper (see Foti et al. 2017). An experienced analyst is needed to be able to interpret this processed data to then inform the inversion stage of the analysis.
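These rules of thumb can be sketched with a short calculation. The frequencies and phase velocities below are hypothetical dispersion picks from three source offsets, used only to show how the mean, standard deviation, wavelength (velocity divided by frequency) and approximate resolution limits might be tabulated.

```python
import statistics

# Hypothetical dispersion picks: phase velocity (m/s) at each frequency (Hz),
# one list per source offset.
frequencies_hz = [5.0, 10.0, 20.0, 40.0]
phase_velocity_by_offset = [
    [300.0, 220.0, 160.0, 140.0],
    [320.0, 230.0, 165.0, 142.0],
    [310.0, 225.0, 158.0, 138.0],
]

# Group the picks by frequency, then summarise across offsets.
per_frequency = list(zip(*phase_velocity_by_offset))
mean_vr = [statistics.mean(v) for v in per_frequency]
std_vr = [statistics.stdev(v) for v in per_frequency]

# wavelength = velocity / frequency
wavelengths = [v / f for v, f in zip(mean_vr, frequencies_hz)]

# Approximate resolution limits from the wavelength range.
min_depth = min(wavelengths) / 2.0
max_depth_range = (max(wavelengths) / 3.0, max(wavelengths) / 2.0)

# Surface-layer VS from the VR at the shortest wavelength (VS ~ 1.1 VR).
vs_surface = 1.1 * mean_vr[wavelengths.index(min(wavelengths))]

for f, m, s, w in zip(frequencies_hz, mean_vr, std_vr, wavelengths):
    print(f"{f:5.1f} Hz  VR = {m:6.1f} +/- {s:4.1f} m/s  wavelength = {w:5.2f} m")
print(f"minimum resolvable depth ~ {min_depth:.2f} m")
print(f"maximum resolvable depth ~ {max_depth_range[0]:.1f} to {max_depth_range[1]:.1f} m")
print(f"surface layer VS ~ {vs_surface:.0f} m/s")
```

These values are only initial bounds to inform the inversion setup; they do not replace the judgement of an experienced analyst.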

Inversion and Uncertainty

The aim of inversion is to develop a model of the layers of the ground that has a theoretical dispersion curve that shows a good match to the experimental dispersion curve data discussed in the previous section. An example of this is shown in Figure 7(a), comparing the experimental dispersion data with a number of theoretical ones. A model of a number of linear elastic layers over a half space is assumed, each with a thickness, density, VS and VP. A theoretical dispersion curve is calculated for the model and compared to the experimental dispersion curve to assess the fit, typically in the form of a misfit function. The properties of the system are then revised in an iterative sense until a satisfactory fit is achieved. A number of different inversion approaches are possible and the key challenges are:

- The relationship between the experimental data space and the model space is nonlinear

- The solution is ill-posed, as 4 model parameters are recovered indirectly from two experimental data parameters

- As the model solution for deeper layers is dependent on the solution for shallow layers, the problem is mixed-determined.

As a result many models can fit the experimental data equally well, and rather than providing a single, deterministic VS profile for each site, the inversion process should provide a suite of theoretical profiles that fit the experimental data well and allow for a measure of the epistemic uncertainty to be reported. Hundreds of thousands of possible profiles should typically be considered in each inversion and any of the models with a sufficiently good match (low misfit) to the experimental data may be representative of the velocity structure at the site.
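One way to express the fit is sketched below, assuming a root-mean-square misfit in which each residual is normalised by the standard deviation of the experimental data at that frequency. This particular form, the acceptance threshold of 1.0, and all of the values are assumptions for illustration; the theoretical curves are hypothetical inputs, as the forward calculation of a dispersion curve from a layered model is not shown here.

```python
import math

def misfit(v_exp, sigma, v_theo):
    """RMS of residuals normalised by the experimental standard deviation."""
    n = len(v_exp)
    total = sum(((e - t) / s) ** 2 for e, s, t in zip(v_exp, sigma, v_theo))
    return math.sqrt(total / n)

# Hypothetical experimental dispersion data (mean and standard deviation, m/s).
v_exp = [310.0, 225.0, 161.0, 140.0]
sigma = [10.0, 5.0, 3.6, 2.0]

# Hypothetical theoretical dispersion curves from three candidate models.
candidates = {
    "model_a": [305.0, 228.0, 160.0, 141.0],
    "model_b": [330.0, 240.0, 170.0, 150.0],
    "model_c": [312.0, 223.0, 162.0, 139.0],
}

misfits = {name: misfit(v_exp, sigma, curve) for name, curve in candidates.items()}

# Keep every model that fits within the assumed threshold, not just the best one.
threshold = 1.0  # assumed: on average within one standard deviation
accepted = sorted(name for name, m in misfits.items() if m <= threshold)

for name, m in sorted(misfits.items(), key=lambda item: item[1]):
    print(f"{name}: misfit = {m:.2f}")
print("accepted:", accepted)
```

Retaining all acceptable models, rather than only the lowest-misfit one, is what allows a suite of VS profiles to be reported.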

The user defined constraints for setting up the inversion (parameterization) can have a significant impact on the VS profiles that are extracted. If there is knowledge of subsurface layering characteristics from a-priori investigation data, this should be used to help constrain the inversion and reduce the number of unknowns (range of thickness of the layers and their properties). If there is no knowledge of the subsurface layering, the parameterization should be set up to reduce the range of solutions, and also avoid over-constraint of the inversion. A systematic approach to this is summarised in Cox & Teague (2016).

An example of the effect of the parameterization is shown in Figure 7. To simplify the discussion only a single VS profile and theoretical dispersion curve is shown for each model. The true solution is shown, which was used to develop the mean dispersion curve data. A range of models with different numbers of layers were then defined to illustrate how well they could match the true solution. The first observation is that the match between the experimental and theoretical dispersion curves is quite similar for all models based on a visual assessment. However, comparison of the VS profiles clearly shows a variation in characteristics. For models with 9 layers or less, the match to the true solution is fairly good, with a poorer match for the 3 and 5 layer models between 9 and 17 m. The clear outlier is the 20 layer solution. Even though more layers might suggest better resolution, the match is poor throughout the entire profile. Here the inversion has tried to get the best fit to the experimental data, but because so many layers have been defined, each change in slope in the dispersion curve has been captured, over-constraining the data. Clearly this must be avoided when running an inversion, as although the result has a good fit to the experimental data, it does not reflect the real VS profile. Therefore, an assessment of the quality of the inversion should look both at the fit of the theoretical data to the experimental, and a reality check of the VS profile using any geologic or geotechnical knowledge.

Figure 7: Examples of: (a) Experimental dispersion curve data with error bounds and the fit of a range of theoretical dispersion curves from the VS profiles in (b); (b) a range of VS profiles with different numbers of layers

2D Active Source Surface Wave Methods

The steps discussed in the previous section are required in order to extract a single suite of 1D profiles that are representative of the soils beneath a single geophone array setup. 2D active source surface wave methods, or 2D MASW, produce surface plots of the variation of VS with depth along a section. This is developed through the combination of 1D profiles from multiple geophone array locations. To develop the VS surface plots that represent this variability along the section, the single VS profiles from each array are interpolated between the mid-point of each array location. The key issue with this method is that the good practice approaches discussed in the previous sections are typically not put into practice: a single source offset is used, no uncertainty in the dispersion curve estimates is reported, and only a single VS profile is developed for each array location.

Summary and reporting requirements

This paper highlights some of the key aspects related to downhole and active source surface wave methods. These are very useful methods, but in order to provide robust input into design and assessment the advantages and limitations of each should be acknowledged by the contractor and understood by the engineer. In order to assess the quality of the various methods, enough information should be provided to enable someone to reprocess or interpret data quality.

All invasive method reports should contain:

- Travel time picking method

- Velocity analysis method

- Expected waveform voltage polarities for shear waves

- Waveform plots with travel time picks identified

- Tabulated travel times

- Tabulated velocity profiles

In addition to this, DH/sCPT reports should contain the source offset used during the test, while all XH/DPCH reports should contain tabulated source-to-receiver distances as a function of depth.

All non-invasive reports should contain:

- Details of the array geometry, source type, source locations that were used

- Processing methods/software used

- Plots of experimental dispersion data and theoretical fits on the same figure

- Plots and tables of VS profiles with clear indication of near-surface and maximum depth resolution limits relative to dispersion wavelengths and array dimensions

If layer boundaries defined from other subsurface investigation data have been used to constrain interpretation, this should also be indicated in any reporting. This helps to reduce any uncertainty in the interpretation of seismic methods, and if this data is available, this should be provided to whoever is interpreting the seismic results.

References

Cox, B. R., Stolte, A. C., Stokoe, K. H., II, and Wotherspoon, L. M., (2018). A Direct Push Crosshole (DPCH) Test Method for the In-Situ Evaluation of High-Resolution P- and S-wave Velocities. Geotech. Test. J. 42(5): https://doi.org/10.1520/GTJ20170382.

Cox, B.R. & Teague, D. (2016) Layering Ratios: A Systematic Approach to the Inversion of Surface Wave Data in the Absence of A-priori Information, Geophysical Journal International 207: 422–438.

Stolte, A.C. and Cox, B.R., (2020). Towards consideration of epistemic uncertainty in shear wave velocity measurements obtained via SCPT. Canadian Geotechnical Journal 57(1): 48-60.

ASTM International. (2017). ASTM D7400-17 Standard Test Methods for Downhole Seismic Testing. ASTM International, West Conshohocken, PA, USA.

Boore, D. M., and Thompson, E. M. (2007). On using surface-source downhole-receiver logging to determine seismic slowness. Soil Dynamics and Earthquake Engineering 27(11): 971-985.

Foti, S., Hollender, F., Garofalo, F., Albarello, D., Asten, M., Bard, P. Y., … & Forbriger, T. (2017). Guidelines for the good practice of surface wave analysis: a product of the InterPACIFIC project. Bulletin of Earthquake Engineering 16: 2367–2420.

Patel, N.S. (1981). Generation and attenuation of seismic waves in downhole testing. M.S. Thesis. The University of Texas at Austin.

Redpath, B.B. (2007). Downhole Measurements of Shear- and Compression-Wave Velocities in Boreholes C4993, C4996, C4997, and C4998 at the Waste Treatment Plant DOE Hanford Site. www.pnl.gov/main/publications/external/technical_reports/PNNL-16559.pdf

Kim, D.S., Bang, E.S., and Kim, W.C. (2004). Evaluation of various downhole data reduction methods for obtaining reliable Vs profiles. Geotechnical Testing Journal 27(6): 585-597.

Wentz, R. (2019). Invasive seismic testing: A summary of methods and good practice. Quake Centre Report, Christchurch.